Articles | November 17, 2016 | 4 min read

The Challenge with Developing ‘General Artificial Intelligence’

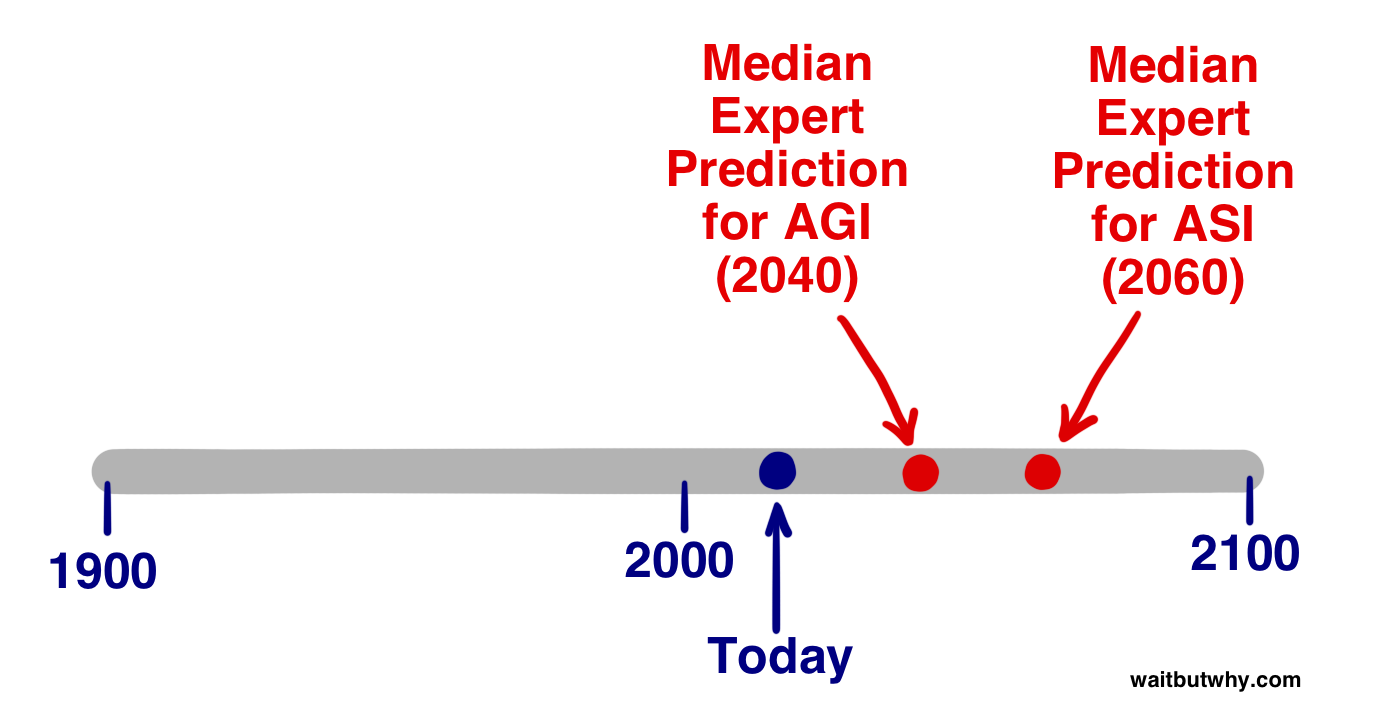

I have always been a keen follower of several ‘anorak’ or ‘geeky’ subjects. Two of these are Artificial Intelligence (AI) and General Research theory. Several months ago, I was reading an article about AI, how it has progressed over the past few decades, and how it could progress in the future. As I was reading this article, I realised that there was a link between Artificial Intelligence and Epistemology which could, at best, suggest that Strong or General AI is still a long way off or, at worst, that it may never happen at all.

Before I explain why general artificial intelligence is still a long way off, let me bring in some definitions to set the scene. These definitions cover five terms that are key to my argument. (I appreciate that people will have different views on these terms, but I believe that the definitions below are reasonably sound.)

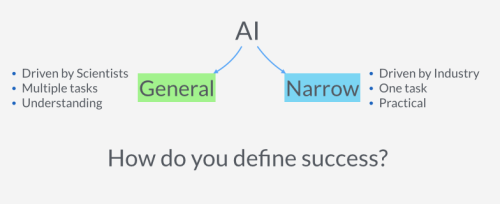

The first is “intelligence”, which is defined as one’s capacity for logic, understanding, self-awareness, learning, emotional knowledge, planning, creativity and problem solving. The second is “Strong or General Artificial Intelligence”, which is defined as the point where a machine’s intellectual capability is functionally equal to or greater than a human’s. This means the machine needs to be capable of logic, understanding, self-awareness, learning, emotional knowledge, planning, creativity and problem solving.

The third term is “Epistemology”, which is concerned with how knowledge itself is defined. Knowledge could be defined as a set of clear and standard rules which could be stored in a flowchart, a computer program, a mathematical formula, etc. Alternatively, knowledge could be less clear or standard, such as people’s opinions, views and their subjective understanding. This kind of knowledge is often stored in people’s heads.

The next term is “Positivism”, which is a stance within Epistemology. Positivism states that the only valid knowledge is that which can be scientifically verified or which is capable of logical or mathematical proof. In other words, this knowledge is rule-based, such as a computer program or a mathematical formula.

The final term is “Interpretivism”, which is an opposing stance to Positivism. Interpretivism argues that if we want to understand knowledge then we have to delve into the reasons and meanings that have driven the outcomes or behaviours. In other words, knowledge is not rule-based but based on people’s behaviours and how they interpret their world.

So what is the link between Epistemology and Artificial Intelligence? At the time of writing, all AI agents (or programs) are built on the traditional technology stack of computer programs running on a suite of hardware. Therefore, at the most basic level, all AI agents are no more than computer programs. They may be very clever and innovative programs, but they are still only computer programs. These programs consist of a clear set of rules and formulae which are consistently followed each time the program is run. This means that these agents generate knowledge at the Positivism end of the Epistemological spectrum.

However, if Strong or General Artificial Intelligence is to be truly created then these AI agents need to be functionally much broader than just blindly following a set of pre-defined rules over and over again. They must be capable of understanding. They need to be self-aware. They need to be self-learning. They need to be able to have and cope with emotions. They need to be creative. Finally, they need to be able to solve problems.

This means that if we truly want General Artificial Intelligence then Artificial Intelligence agents need to cover the full Epistemological spectrum, from the ‘Positivism – Rule-Based’ end to the ‘Interpretivism – understanding the deep reasons and meanings behind behaviours’ end. However, the big constraint is that AI agents are currently built on computer technology which is Positivist, or rule-based, because at their core they are computer programs. This means that these agents are currently unable to cope with the ‘Interpretivism’ requirements of Strong/General AI (such as being self-aware, being creative and so on). The end result is that unless a new method is devised for developing Artificial Intelligence agents that can cope with the full Epistemological spectrum, it may never be possible to achieve true Strong/General AI.

This is where Machine Learning as an idea comes into the picture. It is the ability of machines not simply to follow rules over and over again, but to learn from pre-defined behaviours, rules and their outcomes, and to iterate and experiment to generate different results, all the while learning from these outcomes. As Machine Learning grows and expands, we will be able to see whether General Artificial Intelligence can truly become a reality.
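
To make the contrast between following a fixed rule and learning from outcomes a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made up for illustration: the temperature data, the function names `fixed_rule` and `learn_threshold`, and the simple error-driven update are hypothetical and are not taken from any particular AI system or library.

```python
# Hypothetical illustration only: the data, names and update rule are
# invented for this sketch and do not come from any real AI system.

def fixed_rule(temperature_c: float) -> bool:
    """A hand-written rule: the programmer chose the threshold in advance."""
    return temperature_c > 25.0


def learn_threshold(examples, epochs=200, step=0.1):
    """Adjust a threshold from labelled outcomes instead of hard-coding it."""
    threshold = 0.0
    for _ in range(epochs):
        for value, label in examples:
            predicted = value > threshold
            if predicted and not label:
                threshold += step  # predicted "hot" wrongly: raise the bar
            elif label and not predicted:
                threshold -= step  # missed a "hot" case: lower the bar
    return threshold


# Made-up observations: (temperature in Celsius, did people call it "hot"?)
examples = [(18.0, False), (21.0, False), (24.0, False),
            (27.0, True), (30.0, True), (33.0, True)]

threshold = learn_threshold(examples)
print(f"learned threshold: {threshold:.1f}")
```

The first function always applies the rule it was given. The second arrives at its rule by repeatedly comparing its guesses with the observed outcomes and adjusting. It is worth noticing, though, that the learning procedure is itself still a fixed set of instructions; only the threshold it produces depends on the data.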

This is a Guest Post by Paul Taylor, who is a consultant specialising in ‘Change Management.’ He helps companies with product designs and launches and re-engineering major business processes. In his post, Paul brings in the larger thoughts about General Artificial Intelligence as much of our work moves in the direction of AI.